1 | 1 | # Inference Endpoints |

2 | 2 |

3 | | -Inference Endpoints offers a secure production solution to easily deploy any Transformers, Sentence-Transformers and Diffusers models from the Hub on dedicated and autoscaling infrastructure managed by Hugging Face. |

| 3 | +Inference Endpoints offers a secure production solution to easily deploy any model from the Hub on dedicated and autoscaling infrastructure managed by Hugging Face. |

4 | 4 |

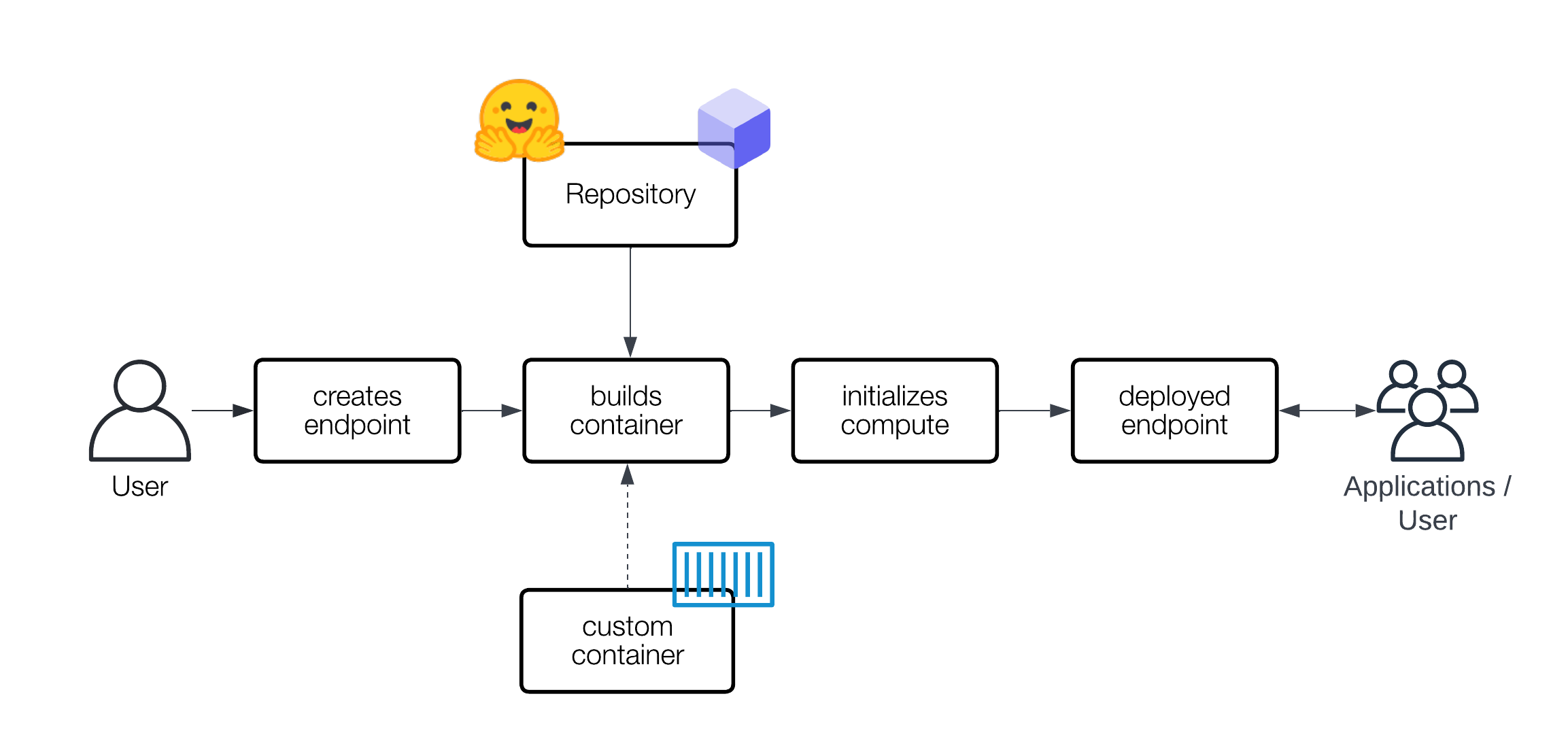

5 | 5 | A Hugging Face Endpoint is built from a [Hugging Face Model Repository](https://huggingface.co/models). When an Endpoint is created, the service creates image artifacts that are either built from the model you select or a custom-provided container image. The image artifacts are completely decoupled from the Hugging Face Hub source repositories to ensure the highest security and reliability levels. |

6 | 6 |

7 | | -Inference Endpoints support all of the [Transformers, Sentence-Transformers and Diffusers tasks](/docs/inference-endpoints/supported_tasks) as well as [custom tasks](/docs/inference-endpoints/guides/custom_handler) not supported by Transformers yet like speaker diarization and diffusion. |

| 7 | +Inference Endpoints support all of the [Transformers, Sentence-Transformers and Diffusers tasks](/docs/inference-endpoints/supported_tasks) as well as [custom tasks](/docs/inference-endpoints/guides/custom_handler) not supported by Transformers yet. |

8 | 8 |

9 | | -In addition, Inference Endpoints gives you the option to use a custom container image managed on an external service, for instance, [Docker Hub](https://hub.docker.com/), [AWS ECR](https://aws.amazon.com/ecr/?nc1=h_ls), [Azure ACR](https://azure.microsoft.com/de-de/services/container-registry/), or [Google GCR](https://cloud.google.com/container-registry?hl=de). |

| 9 | +In addition, Inference Endpoints gives you the option to use a custom container image managed on an external service, for instance, [Docker Hub](https://hub.docker.com/), [AWS ECR](https://aws.amazon.com/ecr/?nc1=h_ls), [Azure ACR](https://azure.microsoft.com/en-gb/products/container-registry/), or [Google GCR](https://cloud.google.com/artifact-registry/docs?h%3A=en). |

| 10 | + |

| 11 | +Inference Endpoints support all container types, for example: vLLM, TGI (text-generation-inference), TEI (text-embeddings-inference), llama.cpp, and more. |

10 | 12 |

11 | 13 |  |

12 | 14 |

@@ -34,6 +36,7 @@ In addition, Inference Endpoints gives you the option to use a custom container |

34 | 36 | * [Access and view Metrics](/docs/inference-endpoints/guides/metrics) |

35 | 37 | * [Change Organization or Account](/docs/inference-endpoints/guides/change_organization) |

36 | 38 | * [Deploying a llama.cpp Container](/docs/inference-endpoints/guides/llamacpp_container) |

| 39 | +* [Connect Endpoints Metrics with your Internal Tool](/docs/inference-endpoints/guides/openmetrics) |

37 | 40 |

38 | 41 | ### Others |

39 | 42 |
