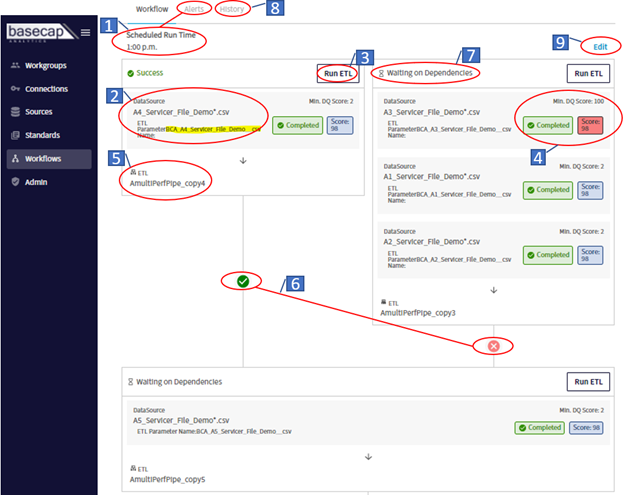

Workflow Components and Operation

The Workflows feature allows ETLs to be triggered automatically once one or more Data Sources, in any configured combination, surpass their predefined quality score thresholds.

The items below correspond, in order, to the numbered callouts in the screenshot above.

1. The Schedule Run Time is used in conjunction with Alerts. An Alert can be set to email specified users if the last ETL in a Workflow doesn’t trigger by the designated Schedule Run Time. In the above screenshot, the last ETL in the example Workflow is ‘AmultiPerfPipe_copy5’.
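The alert rule above can be illustrated with a minimal sketch. The function name, its parameters, and the timestamps are hypothetical and are not part of the platform; the sketch only assumes that an alert is due once the Schedule Run Time has passed without the last ETL having triggered.

```python
from datetime import datetime
from typing import Optional


def should_send_alert(
    now: datetime,
    schedule_run_time: datetime,
    last_etl_triggered_at: Optional[datetime],
) -> bool:
    """Return True once the deadline has passed without the last ETL triggering."""
    triggered_on_time = (
        last_etl_triggered_at is not None
        and last_etl_triggered_at <= schedule_run_time
    )
    return now >= schedule_run_time and not triggered_on_time


deadline = datetime(2022, 10, 21, 6, 0)  # illustrative Schedule Run Time

# The last ETL never triggered and the deadline has passed: an alert is due.
late = should_send_alert(datetime(2022, 10, 21, 6, 5), deadline, None)

# The last ETL triggered before the deadline: no alert.
on_time = should_send_alert(
    datetime(2022, 10, 21, 6, 5), deadline, datetime(2022, 10, 21, 5, 30)
)
```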

2. When creating a Workflow, you must select Data Sources that are already set up in the platform. These Data Sources are also listed in the Sources tab in the blue left-hand navigation pane. The highlighted portion in the above screenshot is the parameter used when setting up an ETL, so that the ETL knows which file to process when triggered.

- If you click the highlighted parameter name, it is automatically copied to your clipboard.

- This parameter name can also be found in the Settings tab in the Manage screen for any Source.

3. The Run ETL button manually triggers the designated ETL. In the above example, it would trigger the ETL circled in #5.

4. When a report has run for a Data Source, the status displays as “Complete” in green (as shown in the screenshot), along with the Data Quality Score for that report run. The ‘Min DQ Score’ is the threshold that a source’s quality score must surpass in order to auto-trigger the ETL. In the above example, the report score did not surpass the threshold, which is indicated by the Score being highlighted in red.

- If a Data Source is used more than once, whether in the same Workflow or in different Workflows, its Min DQ Score is the same in every instance. In other words, if the same Data Source appears in two different Workflows and you change the Min DQ Score in one of them, the other is automatically updated to the same Min DQ Score.
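One way to picture this shared threshold is to store the Min DQ Score once per Data Source rather than once per Workflow. The sketch below is only an illustration of that idea: the Workflow names, the score values, and the dictionary layout are assumptions, not the platform's actual data model.

```python
from typing import Dict, List

# Hypothetical storage: one threshold per Data Source name, not per Workflow.
min_dq_scores: Dict[str, float] = {"ServicerFile*": 80.0, "ABC*": 90.0}

# Workflows only reference Data Sources by name (names are illustrative).
workflows: Dict[str, List[str]] = {
    "WorkflowA": ["ServicerFile*", "ABC*"],
    "WorkflowB": ["ServicerFile*"],
}


def min_dq_score(workflow: str, source: str) -> float:
    """Look up the threshold a Workflow sees for one of its Data Sources."""
    if source not in workflows[workflow]:
        raise KeyError(f"{source!r} is not part of {workflow!r}")
    return min_dq_scores[source]


# Changing the threshold "in one Workflow" updates the single shared entry,
# so every Workflow that uses the Data Source sees the new value.
min_dq_scores["ServicerFile*"] = 85.0
```

Because both Workflows read from the same entry, there is no second copy of the threshold that could drift out of sync.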

5. This shows the ETL Pipeline that is designated to trigger. For the ETL to auto-trigger, all of the following conditions must be met:

- A report is generated on a new Batch file that hasn’t yet auto-triggered the ETL (Batches are explained further in #8),

- The report’s data quality score surpasses the Min DQ Score threshold, and

- Any dependent conditions are met. In the above example, the circled ETL ‘AmultiPerfPipe_copy4’ has no other dependent conditions, so it did auto-trigger. In the example just to its right, three Data Source files must complete successfully, each with a score that surpasses its Min DQ Score, before the ETL ‘AmultiPerfPipe_copy3’ will auto-trigger.

If the Run ETL button mentioned in #3 were pressed, it would manually trigger the designated ETL.
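The auto-trigger conditions above can be sketched as a single check. This is a simplified illustration, not the platform's implementation: the `SourceState` class, the function name, and the sample values are hypothetical, and "surpass" is assumed to mean strictly greater than the Min DQ Score.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class SourceState:
    report_batch: Optional[str]  # Batch of the latest report, None if none has run
    dq_score: Optional[float]    # score of that report
    min_dq_score: float          # threshold the score must surpass


def should_auto_trigger(
    batch: str,
    sources: Iterable[SourceState],
    upstream_etl_statuses: Iterable[str],
    already_triggered: Set[str],
) -> bool:
    # Condition 1: this Batch must not have auto-triggered the ETL before.
    if batch in already_triggered:
        return False
    # Condition 2: every Data Source has a report for this Batch whose score
    # surpasses (assumed: is strictly greater than) its Min DQ Score.
    for source in sources:
        if source.report_batch != batch or source.dq_score is None:
            return False
        if source.dq_score <= source.min_dq_score:
            return False
    # Condition 3: every ETL this one depends on has a status of 'Success'.
    return all(status == "Success" for status in upstream_etl_statuses)


passing = SourceState("10.21.2022", 92.0, 80.0)
failing = SourceState("10.21.2022", 75.0, 80.0)
```

For example, `should_auto_trigger("10.21.2022", [passing], [], set())` evaluates to `True`, while adding `failing` to the list of sources makes it `False`.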

6. Where some ETLs depend on other ETLs completing first, dependency lines and icons are displayed. In the above example, two ETLs must run successfully before the ETL ‘AmultiPerfPipe_copy5’ will auto-trigger. One of these conditions has been met, marked with a green check icon, and the other has not, marked with a red ‘X’.

- A red ‘X’ can indicate that a dependency hasn’t been met for any of four reasons:

- Not all files for a particular Batch have been run yet, so the ETL cannot trigger.

- The Min DQ Score was not surpassed by one of the Data Sources, so the ETL did not auto-trigger.

- The platform has triggered an ETL (either automatically or manually) and is waiting for it to finish processing before updating the status. If the run is successful, the red ‘X’ turns into a green check (statuses are described in #7).

- The ETL triggered and failed during processing, in which case the status would say ‘Failed’.

7. The Status indicates whether any dependencies are still outstanding before an ETL is triggered, or the completion status of an ETL pipeline run once processing has finished. The statuses are:

- ‘Waiting on Dependencies’ indicates that either:

- A dependent report hasn’t yet run,

- A dependent report hasn’t surpassed the Min DQ Score (which is the case in the above example screenshot), or

- An ETL has triggered and is awaiting completion. Once the ETL has completed, one of the following two statuses will display.

- ‘Failed’ displays if an ETL pipeline has failed. For example, if an ETL Pipeline triggered in Azure Data Factory fails for any reason, the status displays in the platform as ‘Failed’.

- ‘Success’ will display if the ETL pipeline completed successfully. A status of Success is required for a dependent ETL to auto-trigger.
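The statuses above can be summarized in a small decision function. This is a hedged sketch only: the function name, the `run_outcome` values (`"running"`, `"failed"`, `"succeeded"`), and the final fallback case are assumptions made for illustration, while the three status labels are the ones described above.

```python
from typing import Optional, Tuple

WAITING = "Waiting on Dependencies"


def etl_status(
    reports_run: bool,
    scores_pass: bool,
    run_outcome: Optional[str],
) -> Tuple[str, str]:
    """Return the displayed status and a short reason for it."""
    if run_outcome == "failed":
        return "Failed", "the ETL pipeline run failed"
    if run_outcome == "succeeded":
        return "Success", "the ETL pipeline run completed successfully"
    if run_outcome == "running":
        return WAITING, "an ETL has triggered and is awaiting completion"
    if not reports_run:
        return WAITING, "a dependent report hasn't yet run"
    if not scores_pass:
        return WAITING, "a dependent report hasn't surpassed the Min DQ Score"
    # Assumed fallback: nothing has been triggered yet, e.g. an upstream ETL
    # dependency is still outstanding.
    return WAITING, "no ETL run has been recorded yet"
```

Only a returned status of ‘Success’ would allow a dependent ETL to auto-trigger.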

8. The History tab displays a list of all the Batch files for that particular Workflow. A Data Source that is part of a Workflow must have an asterisk wildcard (*) in its name; otherwise it cannot be selected in a Workflow. The asterisk generally stands in for a date that changes from day to day, and the characters it replaces denote the Batch.

- If a Data Source is set up with the name ‘ServicerFile*’, the three files below would be picked up by the platform. Whatever replaces the asterisk (*) designates the Batch:

- ServicerFile10.21.2022

- ServicerFile10.22.2022

- ServicerFile10.23.2022
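To make the wildcard rule concrete, the sketch below extracts the Batch from a file name. It is an illustration under stated assumptions, not the platform's matching logic: the `batch_key` helper is hypothetical, and it assumes the Data Source name contains exactly one asterisk that stands for at least one character.

```python
import re
from typing import Optional


def batch_key(source_name: str, filename: str) -> Optional[str]:
    """Return the characters that replace the asterisk, or None if no match."""
    # Assumption: the Data Source name contains exactly one '*'.
    prefix, _, suffix = source_name.partition("*")
    pattern = re.compile(re.escape(prefix) + r"(.+)" + re.escape(suffix))
    match = pattern.fullmatch(filename)
    return match.group(1) if match else None


files = [
    "ServicerFile10.21.2022",
    "ServicerFile10.22.2022",
    "ServicerFile10.23.2022",
]
batches = [batch_key("ServicerFile*", name) for name in files]
# batches == ["10.21.2022", "10.22.2022", "10.23.2022"]
```

A file whose name does not fit the Data Source name, such as `OtherFile10.21.2022`, yields `None` and would not be picked up for this source.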

When a file is picked up by the platform and a report is generated, the platform checks whether the file belongs to a new Batch (e.g. a new date) that it hasn’t recorded before. If so, the UI (User Interface) resets all of the Workflow statuses and Quality Scores so that they reflect only the reports that have run for that Batch.

Only files in which the same characters replace the asterisk (*) can be part of the same Batch. For example, suppose the two sources below have been set up in the platform and built into the same Workflow.

- Source 1 name in Platform: ABC*

- Source 2 name in Platform: XYZ*

If files with the below two names are picked up by the platform, they would be part of the same Batch.

- ABC12_15_2021

- XYZ12_15_2021

If files with the below two names are picked up by the platform, they would not be part of the same Batch. Two separate Batches would be created, and the UI would reflect the Batch of whichever file was picked up last.

- ABC12_15_2021

- XYZ12_16_2021
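The two cases above can be reproduced with a short grouping sketch. The helper below is hypothetical and deliberately simplified: it assumes every Data Source name ends with the asterisk, and that files are listed in the order the platform picked them up.

```python
from typing import Dict, List, Optional, Tuple


def group_into_batches(
    source_names: List[str],
    picked_up: List[str],
) -> Tuple[Dict[str, List[str]], Optional[str]]:
    """Group files by Batch and report the Batch of the last file picked up."""
    batches: Dict[str, List[str]] = {}
    latest: Optional[str] = None
    for filename in picked_up:  # assumed to be in pickup order
        for source_name in source_names:
            prefix = source_name[:-1]  # assumption: the name ends with '*'
            if filename.startswith(prefix) and len(filename) > len(prefix):
                latest = filename[len(prefix):]
                batches.setdefault(latest, []).append(filename)
                break
    return batches, latest


sources = ["ABC*", "XYZ*"]
same, shown_same = group_into_batches(sources, ["ABC12_15_2021", "XYZ12_15_2021"])
split, shown_split = group_into_batches(sources, ["ABC12_15_2021", "XYZ12_16_2021"])
```

In the first call both files land in the single Batch `12_15_2021`. In the second call two Batches are created, and `shown_split` is `12_16_2021`, the Batch of the file picked up last.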

Batch history resets after a certain number of occurrences: after ten for monthly files, and after one month for daily files. If a Batch has already auto-triggered an ETL, that same Batch cannot trigger that ETL again.

9. Clicking the Edit button allows a user to change, add, or remove any of the Data Sources, ETLs, and Min DQ Score thresholds, including adding dependencies.

For information purposes only.